Hikimaging multi-color fluorescence for lymphadenectomy in gynecological cancer

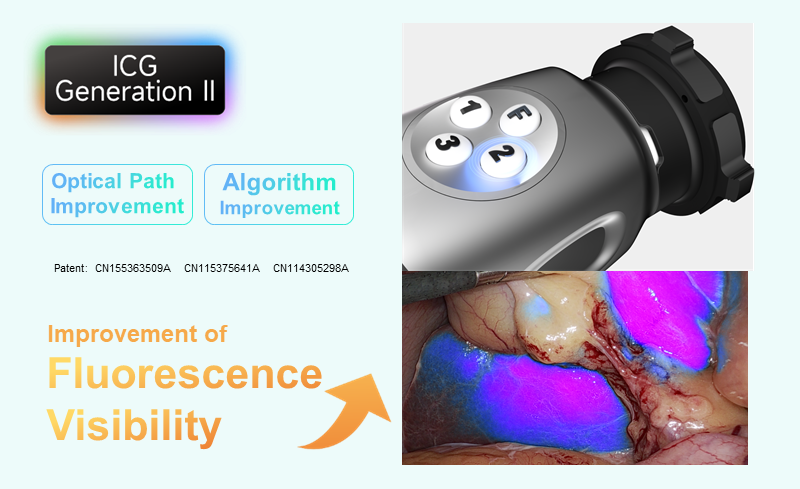

Lymphadenectomy in gynecological cancer has long faced the challenge of accurately identifying and completely removing affected lymph nodes. Micro-metastases, anatomical variations, and the concealed position of deep lymph nodes mean that traditional methods relying on palpation and visual inspection can easily miss diseased tissue, which affects patient prognosis. Fluorescence imaging improves the accuracy and safety of lymphadenectomy through real-time visualization.

Green fluorescence: real-time imaging, rapid targeting of lymph nodes

Surgeons do not need to rely on their memory of preoperative imaging or on repeated palpation. The fluorescence display shows the location of the target lymph nodes directly. Especially in obese patients or for superficial lymph nodes, green fluorescence can quickly guide the surgeon to the target, shorten operating time, and clearly mark lymph node boundaries, reducing accidental injury to surrounding normal blood vessels and nerves.

https://www.hikimaging.com/wp-content/uploads/2025/07/1.绿色荧光:快速锁定淋巴结位置-2.mp4

White light and multi-color fluorescence switching: targeting deep lymph nodes

Accurately identifying deep pelvic lymph nodes, such as those near the obturator foramen and the iliac vessels, is one of the difficult points in gynecological cancer surgery. Hikimaging's switching between white light and multi-color fluorescence can clearly "light up" previously hidden lymph nodes, so that key lesions are not missed.

https://www.hikimaging.com/wp-content/uploads/2025/07/2.白光和多色荧光切换:锁定深处淋巴结.mp4

Multi-color fluorescence: assisting complete removal of lymph nodes

By observing the continuity and integrity of the fluorescent signal, the surgeon can judge intuitively whether the lymph node capsule has been breached, whether satellite lesions exist, and whether the lymphatic drainage area has been cleared thoroughly, minimizing the risk of leaving tiny residual lesions.
https://www.hikimaging.com/wp-content/uploads/2025/07/3.多色荧光:助力完整切除淋巴结-2.mp4

Hikimaging has launched a series of fluorescence products, including 4K fluorescence and 3D fluorescence systems, which use algorithms to greatly improve fluorescence visualization. In addition, its self-developed optical mount effectively suppresses stray-light interference and significantly reduces fluorescence light leakage.